Comprehensive Guide to LangChain: Installation, Usage, and Applications in Python

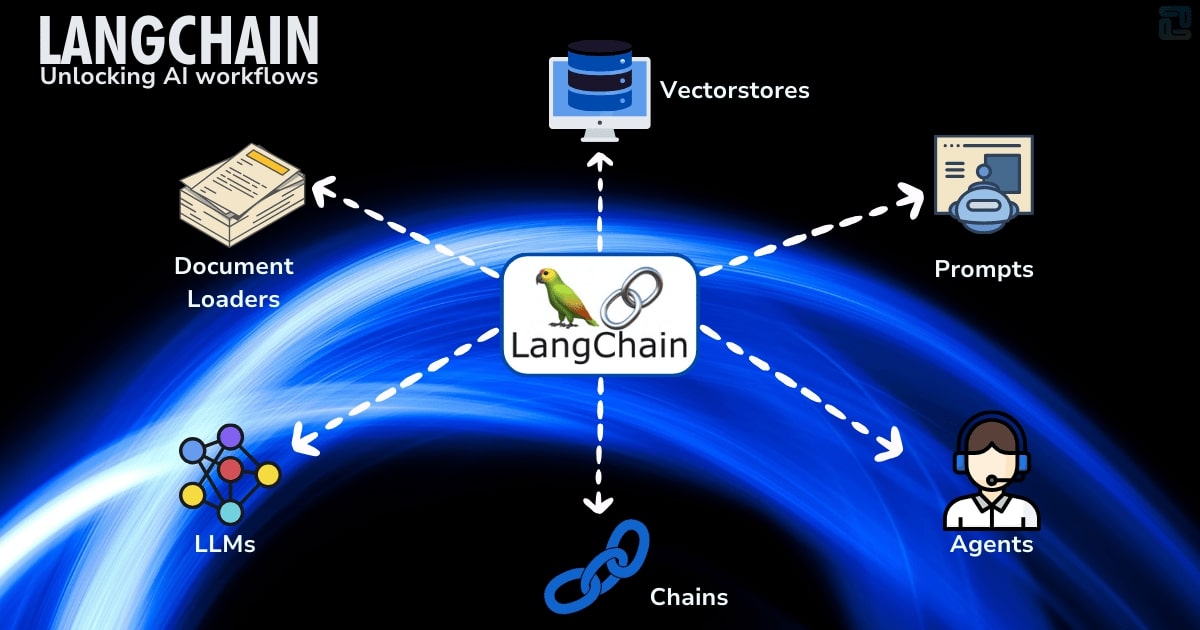

LangChain is an open-source framework that simplifies working with large language models (LLMs) and integrating them into applications. It lets developers combine components such as memory, chains, tools, and agents to build multi-step workflows.

What is LangChain?

LangChain is a modular framework designed to enhance the capabilities of LLMs by enabling structured, multi-step workflows. It supports a range of use cases, including text generation, question answering, summarization, and chatbot development.

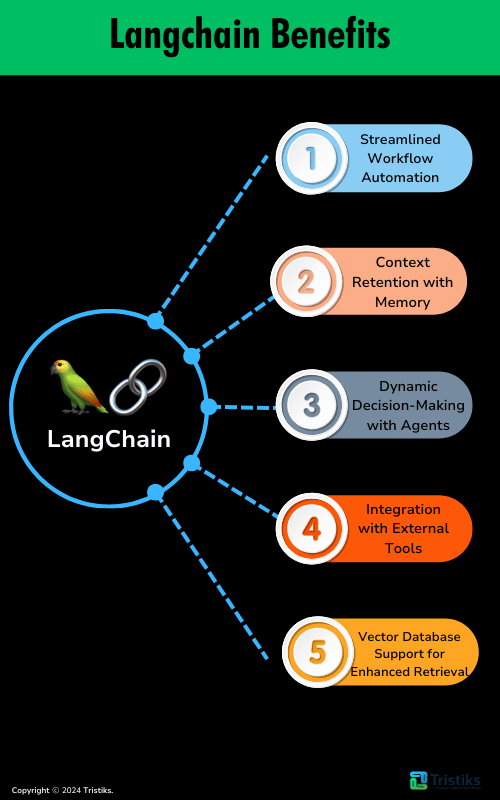

Benefits of LangChain

LangChain offers a modular, scalable, and flexible way to build AI workflows on top of large language models (LLMs). Its key benefits are:

Streamlined Workflow Automation

- LangChain allows the seamless chaining of multiple steps, enabling automated workflows for tasks like document summarization, question generation, and more.

Context Retention with Memory

- Its memory feature maintains conversation history or contextual data, essential for building coherent and responsive chatbots.

Dynamic Decision-Making with Agents

- Agents use the LLM to choose the next action based on the input and the available tools, so applications can handle requests that were not explicitly scripted.

Integration with External Tools

- LangChain connects with APIs, search engines, databases, and more, enhancing application capabilities beyond basic text generation.

Vector Database Support for Enhanced Retrieval

- Integrates with vector databases (e.g., Pinecone), enabling retrieval-augmented generation (RAG) for precise and informed responses in knowledge-based systems.

Core Features of LangChain

- Chains: A sequence of operations or prompts linked together.

- Memory: Helps models retain context over conversations.

- Tools: Extend functionality by integrating APIs, search engines, and databases.

- Agents: Dynamically decide which actions to take in a workflow.

- Document Loaders: Manage and process structured or unstructured text data.

Installing LangChain

LangChain is compatible with Python and can be installed using pip.

Prerequisites

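- Python 3.9 or higher (recent LangChain releases no longer support Python 3.8; check the requirements of the version you install)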

- Virtual environment for isolated package management (optional but recommended)

Installation Steps

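- Create and activate a virtual environment:

```bash
python3 -m venv langchain-env
source langchain-env/bin/activate  # On Windows: langchain-env\Scripts\activate
```

- Install LangChain, the OpenAI integration, and the community integrations via pip:

```bash
pip install langchain langchain-openai langchain-community
```

- Optional extras (`tiktoken` for token counting, `python-dotenv` for loading keys from a `.env` file):

```bash
pip install tiktoken python-dotenv
```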

Using LangChain in Python

LangChain simplifies interaction with LLMs using its modular architecture. Below are key examples to get started.

Setting Up the OpenAI API Key

Add your OpenAI API key to a `.env` file in your project directory:

```
OPENAI_API_KEY=your_openai_api_key
```

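Load the key in your Python script and send a first prompt to the model:

```python
import os

from dotenv import load_dotenv
from langchain_openai import OpenAI  # provided by the langchain-openai package

load_dotenv()  # copies OPENAI_API_KEY from .env into the environment
api_key = os.getenv("OPENAI_API_KEY")

# Initialize the LLM; temperature controls how random the output is
llm = OpenAI(temperature=0.7, api_key=api_key)

# Generate text (.invoke replaces the deprecated llm(prompt) call style)
prompt = "Write a poem about the ocean."
response = llm.invoke(prompt)
print(response)
```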

LangChain Components and Their Usage

1. Chains

Chains allow you to combine multiple tasks or prompts into a single workflow.

Example: Summarizing Text

```python
from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import PromptTemplate

template = """Summarize the following text:

{text}
"""

prompt = PromptTemplate(input_variables=["text"], template=template)

# Pipe prompt -> model -> parser into one runnable chain
# (this replaces the deprecated LLMChain; `llm` comes from the setup example)
chain = prompt | llm | StrOutputParser()

text_to_summarize = "LangChain is a framework designed to build applications with LLMs. It supports chains, tools, memory, and more."
summary = chain.invoke({"text": text_to_summarize})
print(summary)
```
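Conceptually, a chain is function composition: each step's output becomes input for the next step. The plain-Python sketch below models that idea so it runs without an API key. It chains a summarize step into a "write a question" step. `fake_llm`, `llm_step`, and `sequential_chain` are hypothetical illustrations, not LangChain's API.

```python
from typing import Callable, Dict, List

Variables = Dict[str, str]
Step = Callable[[Variables], Variables]


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model: returns the prompt's last line in brackets."""
    last_line = prompt.strip().splitlines()[-1]
    return f"[{last_line}]"


def llm_step(template: str, output_key: str) -> Step:
    """Build one chain step: fill the template, call the model, store the result."""
    def run(variables: Variables) -> Variables:
        prompt = template.format(**variables)
        return {**variables, output_key: fake_llm(prompt)}
    return run


def sequential_chain(steps: List[Step]) -> Step:
    """Compose steps so each one sees the variables produced by the previous ones."""
    def run(variables: Variables) -> Variables:
        for step in steps:
            variables = step(variables)
        return variables
    return run


summarize = llm_step("Summarize the following text:\n\n{text}", "summary")
ask = llm_step("Write one question about this summary:\n\n{summary}", "question")
chain = sequential_chain([summarize, ask])

result = chain({"text": "LangChain composes LLM calls."})
print(result["summary"])   # [LangChain composes LLM calls.]
print(result["question"])  # [[LangChain composes LLM calls.]]
```

Passing a growing dictionary of variables, rather than a single string, lets later steps reuse any earlier output.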

2. Memory

Memory retains context across the turns of an interaction, which is especially useful in conversational AI.

Example: Conversational Memory

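```python
from langchain.chains import ConversationChain
from langchain.memory import ConversationBufferMemory

# Legacy classes, deprecated in recent LangChain versions; `llm` comes from the setup example
memory = ConversationBufferMemory()  # stores the full transcript
conversation = ConversationChain(llm=llm, memory=memory)

# The first exchange is saved to memory, so the follow-up can refer to "it"
response1 = conversation.run("What is LangChain?")
response2 = conversation.run("How does it help in chatbot development?")
print(response2)
```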
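Buffer memory works, roughly, by storing every exchange and inserting the transcript into the next prompt. That is why the follow-up question can say "it". The stdlib sketch below shows the idea. `BufferMemory`, `fake_llm`, and the prompt wording are hypothetical, and LangChain's actual conversation prompt differs.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class BufferMemory:
    """Keeps every (human, ai) turn and renders them as a transcript."""
    turns: List[Tuple[str, str]] = field(default_factory=list)

    def render(self) -> str:
        return "\n".join(f"Human: {h}\nAI: {a}" for h, a in self.turns)

    def save(self, human: str, ai: str) -> None:
        self.turns.append((human, ai))


def fake_llm(prompt: str) -> str:
    """Hypothetical model stand-in: reports how many earlier turns it was shown."""
    return f"I can see {prompt.count('Human:') - 1} earlier message(s)."


def converse(memory: BufferMemory, user_input: str) -> str:
    # Prepend the stored history so the model sees the whole conversation.
    history = memory.render()
    turn = f"Human: {user_input}\nAI:"
    prompt = f"{history}\n{turn}" if history else turn
    reply = fake_llm(prompt)
    memory.save(user_input, reply)
    return reply


memory = BufferMemory()
print(converse(memory, "What is LangChain?"))                        # I can see 0 earlier message(s).
print(converse(memory, "How does it help in chatbot development?"))  # I can see 1 earlier message(s).
```

Because the whole transcript is resent every turn, prompt length grows with the conversation. This is the main trade-off of a plain buffer.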

3. Tools

LangChain can integrate external APIs and tools, extending an application's capabilities beyond text generation.

Example: Using a Search Tool

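```python
from langchain_community.utilities import GoogleSearchAPIWrapper  # pip install google-api-python-client

# The wrapper needs both a Google API key and a Programmable Search Engine ID
search_tool = GoogleSearchAPIWrapper(
    google_api_key="your_google_api_key",
    google_cse_id="your_google_cse_id",
)

query = "Latest advancements in AI"
results = search_tool.run(query)
print(results)
```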
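At its core, a tool is a named function plus a description that tells the model when to use it. The sketch below shows that shape without any network access. `SimpleTool`, `word_count`, and `describe_tools` are hypothetical illustrations, not LangChain classes. LangChain's `Tool` takes similar `name`, `func`, and `description` arguments.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class SimpleTool:
    """Hypothetical minimal tool: a callable plus metadata the model can read."""
    name: str
    description: str
    func: Callable[[str], str]

    def run(self, tool_input: str) -> str:
        return self.func(tool_input)


def word_count(text: str) -> str:
    # Stand-in for an external API call; returns a string, as LLM tools usually do.
    return str(len(text.split()))


def describe_tools(tools: List[SimpleTool]) -> str:
    """Render the tool list the way it might appear inside an agent prompt."""
    return "\n".join(f"{t.name}: {t.description}" for t in tools)


tools: Dict[str, SimpleTool] = {
    t.name: t
    for t in [SimpleTool("WordCount", "Count the words in a piece of text.", word_count)]
}
print(describe_tools(list(tools.values())))           # WordCount: Count the words in a piece of text.
print(tools["WordCount"].run("Latest advancements in AI"))  # 4
```

The description matters as much as the function: it is the only information the model has when deciding whether to call the tool.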

4. Agents

Agents dynamically decide which actions to take based on the input and the available tools.

Example: Agent with Tools

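```python
from langchain.agents import AgentType, Tool, initialize_agent
from langchain_community.utilities import DuckDuckGoSearchAPIWrapper  # needs the duckduckgo-search package

search = DuckDuckGoSearchAPIWrapper()

tools = [
    Tool(name="Search", func=search.run, description="Search the web for current information")
]

# ReAct-style agent that picks tools by their descriptions (initialize_agent is a legacy helper)
agent = initialize_agent(tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True)

response = agent.run("Who is the CEO of OpenAI?")
print(response)
```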
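The `zero-shot-react-description` agent follows the ReAct pattern, which works as a loop:

- The model writes a Thought, an Action, and an Action Input.
- The framework runs the named tool and appends the result as an Observation.
- This repeats until the model emits a Final Answer.

The loop below is a plain-Python sketch of that control flow. The scripted `fake_llm` and the `multiply` tool are hypothetical, and a calculator question replaces the web search so the result is deterministic.

```python
import re
from typing import Callable, Dict


def multiply(expr: str) -> str:
    """Hypothetical tool: multiplies two integers written as 'a * b'."""
    a, b = (int(part) for part in expr.split("*"))
    return str(a * b)


TOOLS: Dict[str, Callable[[str], str]] = {"Multiply": multiply}
ACTION_RE = re.compile(r"Action: (.+)\nAction Input: (.+)")


def fake_llm(prompt: str) -> str:
    """Hypothetical scripted model: acts once, then answers from the observation."""
    if "Observation:" not in prompt:
        return "Thought: I should multiply.\nAction: Multiply\nAction Input: 12 * 7"
    observation = prompt.rsplit("Observation:", 1)[1].strip()
    return f"Thought: I now know the answer.\nFinal Answer: {observation}"


def run_agent(question: str, max_steps: int = 5) -> str:
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        output = fake_llm(transcript)
        transcript += output + "\n"
        if "Final Answer:" in output:
            return output.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(output)
        if match is None:
            raise ValueError(f"Could not parse model output: {output!r}")
        tool_name, tool_input = match.group(1).strip(), match.group(2).strip()
        # Run the chosen tool and feed its result back to the model.
        transcript += f"Observation: {TOOLS[tool_name](tool_input)}\n"
    raise RuntimeError("Agent did not finish within max_steps")


print(run_agent("What is 12 times 7?"))  # 84
```

The `max_steps` cap guards against a model that never produces a final answer. Real agent executors include a similar iteration limit.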

Purpose of LangChain

LangChain bridges the gap between LLM capabilities and real-world applications. Here are some purposes it serves:

- Streamlining Multi-Step Workflows: Automates complex tasks like document summarization and QA pipelines.

- Integration with External Tools: Incorporates APIs, search engines, and databases seamlessly.

- Enhanced Context Retention: Improves conversational experiences with memory mechanisms.

- Scalability: Supports dynamic workflows with agents and chains.

Using LangChain for Chatbots

LangChain excels in building chatbots by combining its memory and agent capabilities.

Example: Simple Chatbot

```python
from langchain.chains import ConversationChain
from langchain.memory import ConversationBufferMemory

# Initialize memory and conversation chain (`llm` comes from the setup example)
memory = ConversationBufferMemory()
chatbot = ConversationChain(llm=llm, memory=memory)

# Simulate a conversation
response1 = chatbot.run("Hi! What's your name?")
response2 = chatbot.run("What can you do?")
print(response1, response2, sep="\n")
```

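Example: Chatbot Agent with a Weather Tool

```python
from langchain.agents import AgentType, Tool, initialize_agent
from langchain_community.utilities import OpenWeatherMapAPIWrapper  # pip install pyowm

# WeatherAPIWrapper is not a standard LangChain class; OpenWeatherMapAPIWrapper is swapped in
weather_tool = OpenWeatherMapAPIWrapper(openweathermap_api_key="your_weather_api_key")

tools = [Tool(name="Weather", func=weather_tool.run, description="Get current weather for a city, e.g. 'New York,US'.")]

agent = initialize_agent(tools, llm, agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION, verbose=True)

# User interaction
query = "What's the weather in New York?"
response = agent.run(query)
print(response)
```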

Additional Features and Use Cases

1. Document Loaders

LangChain supports a variety of document loaders for processing text data.

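```python
from langchain_community.document_loaders import TextLoader

loader = TextLoader("example.txt", encoding="utf-8")
documents = loader.load()  # list of Document objects with page_content and metadata
print(documents)
```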
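A text loader's job is small: it reads a file and wraps its contents in a document object. That object carries `page_content` plus `metadata` such as the source path. The stdlib sketch below mirrors that shape. The `Document` dataclass and `load_text` helper are hypothetical stand-ins for LangChain's classes, and the file is a temporary one created for the demo.

```python
import tempfile
from dataclasses import dataclass, field
from pathlib import Path
from typing import Dict, List


@dataclass
class Document:
    """Hypothetical stand-in for a loaded document."""
    page_content: str
    metadata: Dict[str, str] = field(default_factory=dict)


def load_text(path: str, encoding: str = "utf-8") -> List[Document]:
    """Read a whole text file into a single Document, recording where it came from."""
    text = Path(path).read_text(encoding=encoding)
    return [Document(page_content=text, metadata={"source": path})]


# Create a throwaway file so the example runs anywhere.
with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "example.txt"
    sample.write_text("LangChain supports chains, tools, and memory.", encoding="utf-8")
    documents = load_text(str(sample))

print(documents[0].page_content)  # LangChain supports chains, tools, and memory.
print(documents[0].metadata["source"].endswith("example.txt"))  # True
```

Keeping the source path in `metadata` lets later steps, such as retrieval, report where an answer came from.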

2. Custom Prompts

You can create custom prompt templates for specific tasks.

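```python
from langchain_core.prompts import PromptTemplate

custom_prompt = PromptTemplate(
    input_variables=["product"],
    template="Write a creative ad for {product}.",
)

# Fill the template, then send the resulting string to the model from the setup example
response = llm.invoke(custom_prompt.format(product="smartwatch"))
print(response)
```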
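A prompt template is a format string whose placeholders become the template's input variables. The sketch below infers and checks those variables with Python's standard `string.Formatter`. `SimplePromptTemplate` is a hypothetical class. LangChain's `PromptTemplate.from_template` similarly infers `input_variables` from the placeholders.

```python
from string import Formatter
from typing import List


class SimplePromptTemplate:
    """Hypothetical minimal template: infers variables and validates inputs."""

    def __init__(self, template: str) -> None:
        self.template = template
        # Formatter.parse yields (literal_text, field_name, format_spec, conversion) tuples.
        self.input_variables: List[str] = sorted(
            {name for _, name, _, _ in Formatter().parse(template) if name}
        )

    def format(self, **kwargs: str) -> str:
        missing = [v for v in self.input_variables if v not in kwargs]
        if missing:
            raise KeyError(f"Missing input variables: {missing}")
        return self.template.format(**kwargs)


ad_prompt = SimplePromptTemplate("Write a creative ad for {product}.")
print(ad_prompt.input_variables)               # ['product']
print(ad_prompt.format(product="smartwatch"))  # Write a creative ad for smartwatch.
```

Validating inputs before formatting turns a silently malformed prompt into an immediate, readable error.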

3. Vector Databases

LangChain integrates with vector databases such as Pinecone for retrieval-based tasks.

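```python
from langchain_openai import OpenAIEmbeddings
from langchain_pinecone import PineconeVectorStore  # pip install langchain-pinecone

# Connect to an existing index; the API key is read from the PINECONE_API_KEY
# environment variable, and queries must use the same embedding model as the indexed data
vectorstore = PineconeVectorStore(index_name="langchain-demo", embedding=OpenAIEmbeddings())

# Query the vector database for the most similar documents
docs = vectorstore.similarity_search("Explain the uses of LangChain.", k=3)
for doc in docs:
    print(doc.page_content)
```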
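Under the hood, a vector store embeds each text as a vector. It answers a query by ranking the stored vectors by similarity to the query's vector. The sketch below replaces a real embedding model with a toy bag-of-words vector and ranks by cosine similarity, so it runs offline. The `embed` function and sample texts are hypothetical, and real embeddings capture meaning rather than shared words.

```python
import math
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts (a real system would call an embedding model)."""
    return Counter(text.lower().replace(".", "").split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def similarity_search(query: str, texts: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Return the k texts most similar to the query, best first."""
    q = embed(query)
    scored = [(cosine(q, embed(t)), t) for t in texts]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]


corpus = [
    "LangChain connects LLMs to tools and data.",
    "Pinecone stores vectors for fast similarity search.",
    "The ocean covers most of the planet.",
]
for score, text in similarity_search("uses of LangChain", corpus):
    print(f"{score:.2f}  {text}")
```

A production vector database does the same ranking over millions of vectors, using approximate nearest-neighbor indexes instead of this linear scan.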

Summary

LangChain is a versatile framework that extends LLMs with structured workflows, context retention, and integration with external tools. Its modular design supports everything from simple text generation to chatbots that combine memory with tool-using agents.

Whether you're an AI enthusiast or a seasoned developer, LangChain offers tools and abstractions to streamline your workflow. By understanding and leveraging its components, you can create robust applications that harness the full potential of large language models.